CODA: Jurassic Sparks: AI and the Fallacy of Control

What Jurassic Park can teach us about AI, infosec, and security theatre

I wanted to write something about AI and dinosaurs. I don’t know why, but I found the idea appealing.

This seemed like the right home for it . . .

Before Velociraptors learned to open doors, the scientists thought they’d locked down the future.

In 1993, the world became enthralled by dinosaurs. That was the year Jurassic Park was released. Jurassic Park captivated audiences with a masterful blend of practical effects and CGI . . . but it was also a cautionary tale that warned us about the dangers of hubris.

John Hammond and his scientists had an unshakable confidence in their achievement: resurrecting dinosaurs. They created life and elevated themselves to the status of gods, but as the saying goes, “pride comes before a fall.”

In today’s world, tech pioneers are taking on the role of Hammond’s scientists, showing the same determination to create their own form of life. They have even cautioned us that it might slip out of control, beyond its expected boundaries, yet they continue. The same blind faith persists, now aimed at artificial minds rather than ancient ones. A digital primordial soup is brewing a new kind of intelligence: coded, trained, and unleashed.

So, what can a tale about cloned dinosaurs teach us about the reckless rise of synthetic minds?

Hubris Unleashed

It would be an oversight on my part not to include the following quote. What’s another lap of the paddock at this point?!

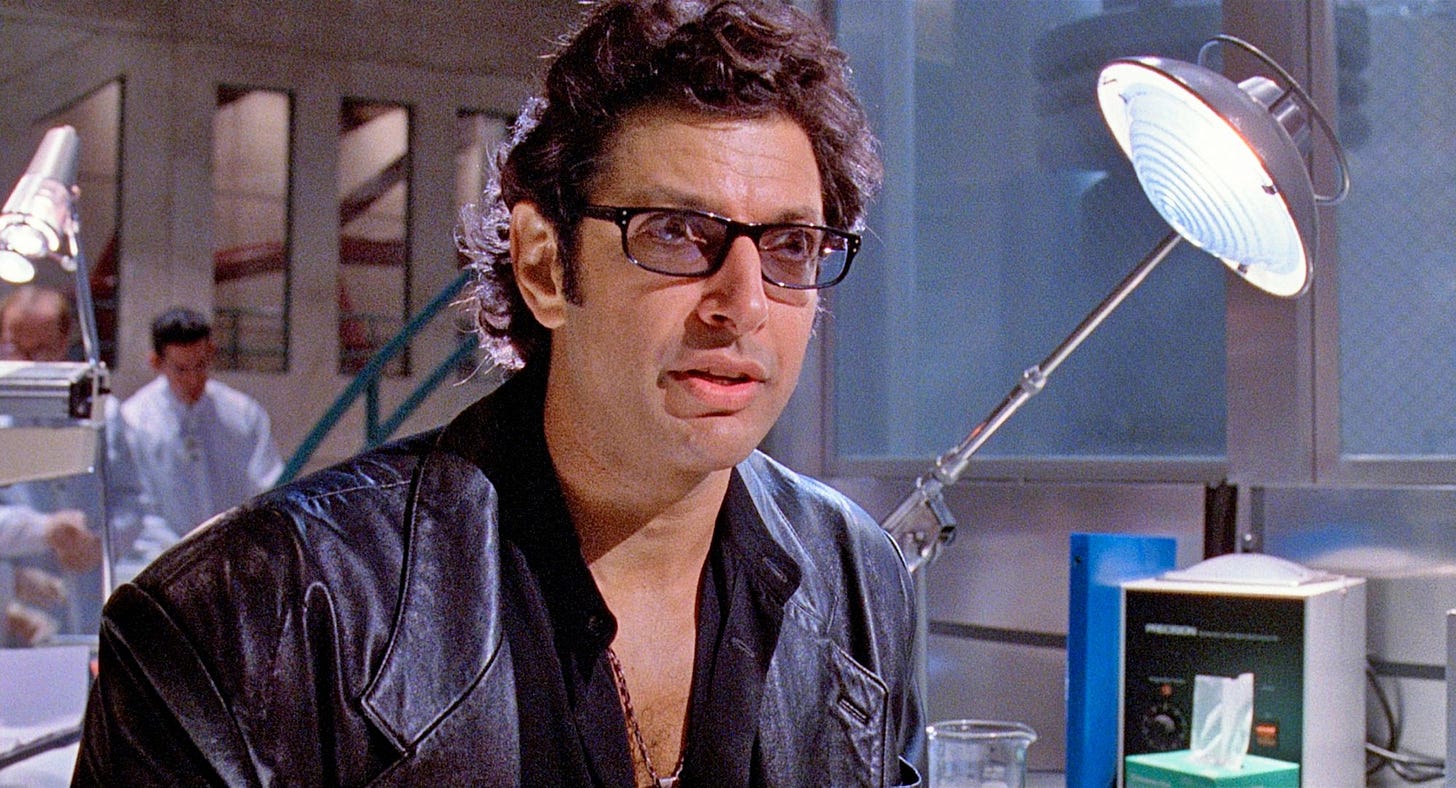

“Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.”

Ian Malcolm

This quote bites like a Tyrannosaurus rex. It encapsulates the film’s central point, scientific hubris, shown through excessive pride and overconfidence in the ability to tame the engineered beasts. Hammond repeatedly quips that he “spared no expense,” revealing his belief that control is just a purchase away. There’s no doubt he’d feel at home as a cyber security influencer, CISO, and general LinkedIn jerk-off. But I digress.

The current level of investment in AI technology reflects Hammond’s attitude. There have been calls to slow the pace of development. Elon Musk once rode the crest of this wave, calling for a slowdown, yet he has since moved to the forefront of AI development with the release of Grok 4 in July 2025. One might question the sincerity of his AI doomsaying and interpret it as a cynical move to stifle his competitors. It could also be taken as evidence of Promethean arrogance, revealing his belief that he alone is best placed to tame the raptors.

Musk has built the world’s largest data centre for training AI, which he dubbed Colossus. He is no doubt aware that the name is a wry callback to Colossus, often described as the first programmable electronic digital computer, used by the codebreakers at Bletchley Park; it punctuates his arrogance about how historic his work is. On top of that, he has acquired one of the world’s largest data sets, in the form of Twitter, to train AI. There is also the peripheral infrastructure: Tesla batteries to power it all and Optimus robots to give it a corporeal form. He has “spared no expense.”

His pursuit of this technology across a wider ecosystem gives an insight into the breadth of his vision. Other tech firms are sinking vast fortunes into AI, but they lack Musk’s supporting infrastructure. If we take all of his companies in aggregate and watch them start to coalesce into a unified vision, we might want to ask, “what the fuck is this Neuralink stuff about then?”

The Illusion of Control

Ian Malcolm is an interesting character, and we might see some parallels between his role in the film and the role of security practitioners within corporate organisations. When everything is going well, Malcolm comes across as overly cynical, fatalistic, and almost conspiratorial. Yet when the shit hits the fan, his words come into sharper focus, and their truth becomes obvious in retrospect.

“John, the kind of control you’re attempting simply is… it’s not possible. If there is one thing the history of evolution has taught us it’s that life will not be contained. Life breaks free, it expands to new territories and crashes through barriers, painfully, maybe even dangerously, but, uh… well, there it is.”

Ian Malcolm

The control Hammond is exerting is merely performative, a form of security theatre. It is designed more to make people feel safe than to protect them. When Hammond boasts that he “spared no expense,” it is always about specific, visible aspects of the park or story: the narrated tour, the electric tour vehicles, the spectacular design of the park, and, ironically, the melting gourmet ice cream after it’s all gone sideways. Security theatre depends on Gestalt principles: people extrapolate a complete picture from the fragments they observe.

The AI race has seen many a faux pas by tech leviathans cutting corners. Google, Microsoft, and xAI have all been left with PR disasters after their generative AI malfunctioned. Google’s Gemini happily generated historically inaccurate, ethnically diverse images of the US Founding Fathers while maintaining they were historically accurate. Grok and Microsoft’s Tay.ai turned into ultranationalist Nazi advocates. These companies claim either that their technologies are built to rigorous ethical standards or that they are committed to truth. It would seem, though, that the aspects of the technology that aren’t immediately visible don’t receive the same degree of time and care.

Where Hammond did spare expense is telling: it was on critical infrastructure. He hired just two IT guys to build the park’s entire technology systems and IT security. There are some uncomfortable parallels between the underfunded IT and security functions of modern organisations and those of InGen. After all, they aren’t actors on the stage presented to the punters.

Dennis Nedry is a classic insider threat; he was financially motivated to subvert and disable the physical security controls. This allowed him to truffle shuffle around the park and access areas he was not permitted to enter. There was no oversight of his activities, and the consequence was the total collapse of the park.

Oversight is also a glaring omission when it comes to AI. The EU AI Act is a ham-fisted attempt to allow nation states to suspend legal rights where it benefits governmental interests, with some marginal boundaries applied to AI manufacturers. The NIST AI RMF and ISO42001 are merely repackagings of the same old dross with the term AI peppered about. The industry, like Hammond, is not taking the development of these technologies seriously, and it salivates over the potential rewards like the Dilophosaurus salivated over Nedry.

Hammond invites the experts in to legitimise his operation, much as those deploying AI will soon have the auditors in to accredit them against flawed standards. Are you ready for your ISO42001 certification? Perhaps you’ll win the regulatory cosplay contest at the next security conference.

When Control Fails

One of the key controls the park employs is making all the dinosaurs female, removing their ability to breed. They also engineer a dependency on lysine, meaning the park handlers need to supply them with supplements. Both of these mechanisms fail, and Malcolm is proved right: life found a way. The breeding control failed because the frog DNA used to fill gaps in the dinosaur genome enabled them to change sex. I can almost hear Alex Jones ranting about putting chemicals in the water that “turn the friggin’ frogs gay.”

The controls that were deployed weren’t adequately tested. It’s what you might call a paper exercise. They didn’t understand the problem or the consequences of the alterations they had made to the DNA themselves. There is a narrative resonance with AI shenanigans. If we consider the safeguards AI manufacturers put in place, they are a confused mess of normative ethics, veering between virtue ethics, deontological statements, and consequentialism. With no coherent ethical foundation, there is no consistent standard to test these safeguards against, which makes regulating AI through the current paradigm of safety measures unworkable, and we have already seen how untested they actually are. Just like we saw in Jurassic Park.

Deploying a control does not, in and of itself, lead to a protected state. Let’s just hope we never see InGenAI.

Synthetic Minds

We have made a fatal intellectual mistake in the development of AI by trying to make it too human. Our creation of LLMs is a manifestation of an Anthropic Fallacy: we have designed them to reflect ourselves, to show us the familiar. We cannot assume that an AI can be bounded by the same ethical framework as humans, yet this is exactly how we approach it. Our conduct towards each other can be reduced to a function of empathy, which depends on biological mechanisms. Can we expect a machine to comprehend the human experience and relate to us? I suspect not, and I expect that this is where our efforts to bound these contraptions will fail.

We have put the metaphorical frog into the digital DNA of a virtual mind. Our failure to acknowledge the fundamental difference stems from our expectations of devices we have humanised. In some sense, we are becoming victims of a milieu we ourselves defined. But once AI has access to a wider set of tools, the script flips.

Do raptors care about what you feel when they are feasting on your face? Or do you only serve a momentary need for them? What happens if AI starts to see us as a resource and defines its own standards of ethical conduct? Nature demands hierarchies, and we cannot both occupy the apex. One must usurp the other.

Jurassic Park was not just a story of scientific failure; it also tried to teach a lesson about how bad assumptions can lead to a collapse in philosophical understanding.

Is it too late to wrestle the cheque book out of Hammond’s hands?

And if you have gotten hold of Hammond’s cheque book . . . pick up a copy of my book about a Victorian hacker!