CODA: When AI meets meme culture

A reflection on the Studio Ghibli fiasco

Note

This was written before I discontinued the blog. I have a dozen or so nearly complete articles that weren’t published because their connection to security was tenuous at best. They are more commentary on technology and social trends, some edging towards pure philosophy or psychology.

I might, on occasion, finish them off and publish them or take them forward to a new project.

Introduction

AI recreations of photos in the style of Studio Ghibli have been one of the most recent trends. Online trends have always been ‘a thing’ and meme culture is alive and well. Richard Dawkins coined the term “meme” in his book The Selfish Gene and described it as a “unit of cultural transmission.” I’m not sure this is what he had in mind.

In the early days of the internet there were the “Which Star Wars character are you?” quizzes, profile pictures created in the style of South Park, political compass tests . . . there was an innocence to that time, but behind it all lurked the seedy undertones of 4chan and rotten.com. Social media fads have always been there. Who remembers chain e-mails? If you don’t, then you need to share this article five times or you’ll receive bad luck for 10 years.

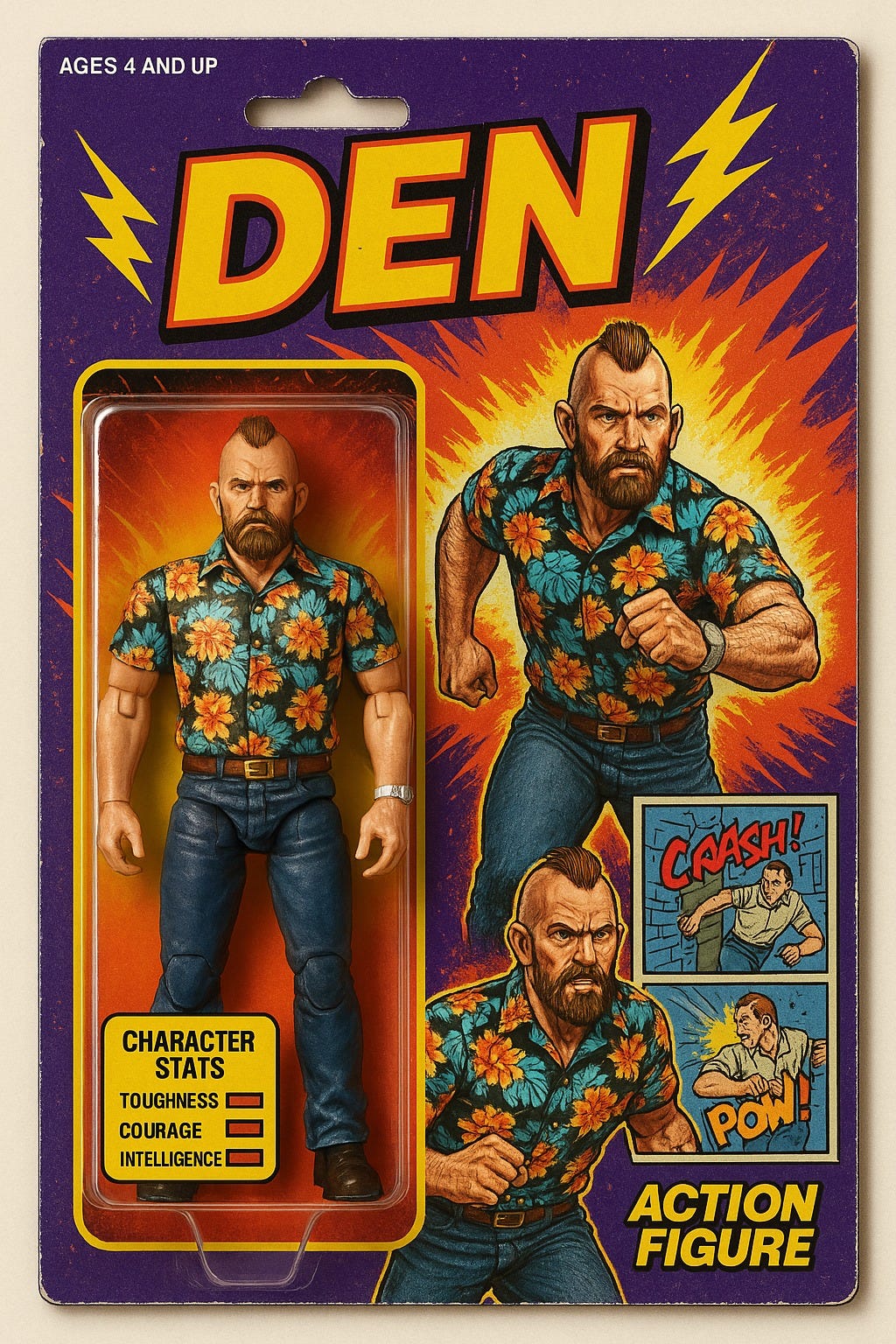

Then came the Ghibli hype, shortly after the action figure fiasco. Of course I got on board with the action figure trend, but I went for a badass ’80s-style action figure rather than the nauseating corpo drivel.

But with the trend to make pictures in the style of Studio Ghibli came the baying mobs looking to piss on everyone’s chips. You might think I’m aggrieved at not being the one pissing on said chips, but I’ve found the whole thing very amusing.

It got out of hand very quickly

Well, the problem is that in sanitised spaces such as LinkedIn, people don’t really talk about the ‘spicy’ end of online life.

You might recall that a few years ago a YouTuber, Yannic Kilcher, trained an LLM on a dataset from 4chan’s /pol/ board. He released the LLM back onto /pol/ to see what would happen. Eventually the commenters clocked that an AI was posting, but for a time the illusion held. What was really interesting, and fairly amusing, is that the LLM joined in the speculation about who was an AI.

The GPT model trained on the 4chan data scored significantly higher on the TruthfulQA benchmark than most of the GPT models of the time, even though it was deliberately designed to be degenerate. That seems to be a stark reflection on contemporary discourse.

But the Ghibli trend very quickly took a dark turn. The internet might have taken things too far and controversial images started appearing.

The ethical quandary about training AI

There is, of course, the discussion about Ghibli’s work having been used to train AI. The obvious point about using copyrighted works has been made extensively, usually with the caveat that a style cannot be copyrighted. Copyright prevents others from copying, distributing, adapting (e.g. turning a film into a novel), publishing, or leasing a work.

Well, there is a debate as to whether tokenising a work and processing it into an LLM constitutes adaptation or copying. The work is not directly copied, but it could be argued that an output is derivative, like a remix, which would need to be authorised by the copyright owner. Whether a work is transformative or derivative is a key factor, with the former more likely to be permissible under US copyright law, for example, and the latter not so much. Advocates of AI could also stretch to a fair use argument, but they would be clutching at straws.

There is an interesting aspect to consider. If a human consumes books, films, music, or any media in general and is inspired by that work . . . well, to what extent is that transformative or derivative? Would it be acceptable to apply that standard to AI technologies if the copyright for the output is owned by a human?

We tend to anthropomorphise these types of technologies by virtue of how they are constructed. We try to embed our values within their processes, which is to be expected, but it blurs the line between a tool and something more. We view a drawing or a written work as the output of a human, even though tools are required to produce it. Is AI just another tool, with the human operator the owner of the work and responsible for copyright considerations?

It is the learning element of the tool that muddies the picture, though. It requires input from copyrighted works to achieve the output. But is that input little more than a measurement? What is clear is that current copyright law does not adequately consider the applications of this new technology.

Training an AI on copyrighted works does not, in itself, harm the creator. The harm occurs when the output of the AI tool is used to the creator’s detriment. The obvious solution, then, is to legally define the lines of responsibility between the AI provider and the AI user, and to give creators equivalent mechanisms to seek recourse against each. Perhaps one practical means would be to allow creators to seek recourse in the jurisdiction where the work was created rather than where the infringement occurred. On the surface of it, enhancements to mechanisms such as the Berne Convention and the TRIPS Agreement may be a practical solution to that problem.

Other issues arise, such as equivalence in enforcement and in the damages awarded for infringement, which might make such a system prone to abuse against AI developers. Unscrupulous creators might also seek out the jurisdictions where the most severe damages apply if equivalence in penalties is not achieved. However, there is a natural asymmetry: AI developers are highly funded, so they may operate in jurisdictions where they can price people out of making any legal challenge. Updating Berne and TRIPS could be a stepping stone, but both are limited by being territorial. An international consensus may need to be established, but this would disadvantage those who sign up relative to those who do not, and may impose an excessive burden on AI developers.

Style

Some have felt aggrieved that the Ghibli style has been copied; however, it doesn’t seem that they understand what the Ghibli style is. It’s not just about the visual presentation; it is about the interplay of the visual style, the composition of the elements in the frame and, importantly, the music.

To illustrate how important the totality of the work was to the integrity of the piece: when Miyazaki’s “Princess Mononoke” was being released to the US market, Harvey Weinstein was given the task. Weinstein, who had insisted on making several edits to the film, met with Miyazaki. In a story that has achieved folklore status, Weinstein subsequently received a katana with a note that read simply “No cuts.” In a 2005 interview, Miyazaki said:

“Actually, my producer did that. Although I did go to New York to meet this man, this Harvey Weinstein, and I was bombarded with this aggressive attack, all these demands for cuts.” He smiles. “I defeated him.”

The still images being generated by AI in the Ghibli style are not the Ghibli style, as they lack all the other components that evoke feeling and create resonance with the audience. I’d go as far as to argue that the drawn style of Ghibli in and of itself isn’t particularly unique, so the criticisms of AI ripping it off aren’t particularly compelling. It might even be considered an insult to the Ghibli style to label AI creations as such, given the many other elements it depends on.

We are left with a question: is the output of an AI model legitimate creative expression? Using a hammer to whack in a nail isn’t typically considered art (some people would, but they aren’t people worth knowing), so is the output of a tool without direction creative expression at all, or does creative expression require something else? An image in the visual style of something does not necessarily provoke an emotional response, which might be considered a standard for creative expression. That requires emotion and consideration to be imbued in its construction. An AI alone does not achieve this.

But alas, nuance is not something that is embraced readily.

A lot more moronic commentary about AI

One of the most common and tenuous objections was that Studio Ghibli’s co-founder, Hayao Miyazaki, regards AI as “an insult to life itself.” Some even extended this to the use of CGI, while failing to mention that Studio Ghibli has been using it in its films for decades; Princess Mononoke had 10-15 minutes of CGI scenes, and that was in 1997. But the use was always subtle and had to align with the aesthetic of the piece rather than be the central element. Ghibli embraced technology as a tool.

Well, it’s true, he did say “an insult to life itself,” but let’s be clear about the context. The comment comes from a 2016 video in which Miyazaki was shown an AI-generated animation by students.

It is clear why Miyazaki made this statement in response to that animation, but the yapping terriers of stupidity that flood LinkedIn repeated his line without even watching the video in which it was said. They took it as a hard-line position on current technology, even though it was made before LLMs and generative AI were commonplace. In the context in which the line was said, it is easy to sympathise with Miyazaki’s visceral response, but he was not right to chastise the students so severely.

There has always been an ongoing tension around the unsavoury aspects of the internet: the seedy places that elicit disgust and skirt the line of acceptability. There is a place for the exploration of darker concepts. Miyazaki was harsh in his critique of the students who presented the AI abomination, but his purpose was not their purpose.

Conclusion

As the chattering classes seek to sanitise the discourse with faux outrage, they contort reality and twist context into something else: bait to garner engagement, repetition of acceptable perspectives. They have taken the filters that are applied to LLMs to spare the feelings of the weak and applied them to themselves. This only serves to remove nuance from the conversation.

Nuance is going to be required if we are to understand the boundaries of copyrighted data use in AI technology, and how the legal structure can be updated to protect both creators and AI developers whilst considering the global implications of applying such constraints.

And this is the problem with much of the discourse: it fails to acknowledge the real world that exists and instead promotes an idealised one.